AI’s Intense Energy Demands and How Local Governments Can Take Action

There are concrete actions that local governments can take to combat AI's growing energy problem and help shape a more sustainable AI future.

By Leila Doty, Eleonor Bounds, & Lindsey Washburn

When you use ChatGPT, have you ever wondered about the impact you might be having on the environment?

Generative AI (GenAI) tools offer promising opportunities to help governments conduct business and deliver public services more efficiently. At the same time, the data centers powering GenAI technologies can significantly impact the environment. In particular, the energy demands of GenAI are so great that experts warn that our current energy infrastructure won't be able to support increasing demand.

In this installment of the “AI & the Environment” blog series, we explore the energy impacts of GenAI, why public agencies should care, and what local governments can do to address the problem. Our aim is to spark conversation and action from those in local government so that together, we can work towards shaping a more sustainable future for AI where the benefits outweigh the costs.

AI & the Environment

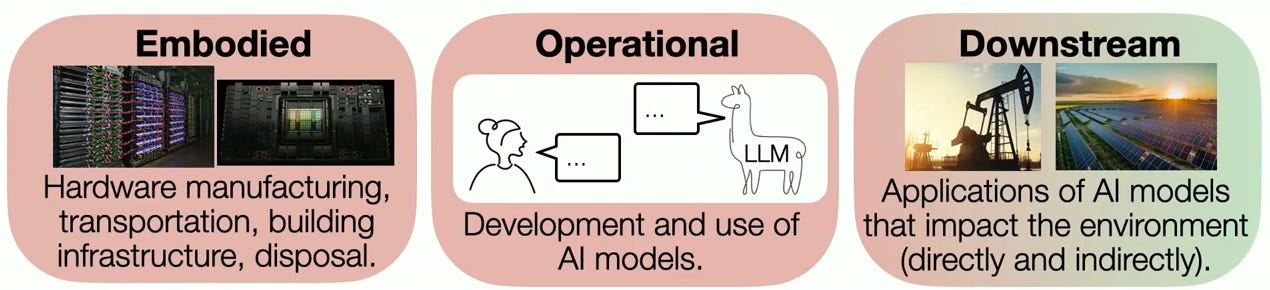

How does AI impact the environment? The environmental impacts of AI can be categorized into three main types: embodied, operational, and downstream.

Figure 1: Three categories of environmental impact from Dr. Emma Strubell’s keynote presentation at the Markkula Center for Applied Ethics AI and the Environment event.

Embodied impacts are the environmental effects associated with an AI model, from the extraction of raw materials to the end-of-life disposal. This can include resource extraction, manufacturing, transportation, and construction.

Operational impacts occur during the development and use of an AI model. This includes impacts during the model training process and when the model is deployed by users.

Downstream impacts can be positive or negative and refer to the direct or indirect effects of how the AI model is applied.

This blog series will cover the energy, water, and public health impacts of AI. Today, our focus is on energy.

AI is an Energy Hog

GenAI uses a lot of energy.

Training a model like GPT-3 can use roughly 1,300 megawatt-hours (MWh) of electricity, equivalent to the annual consumption of about 130 U.S. homes.

A single ChatGPT query consumes approximately 2.9 watt-hours of electricity, roughly 10 times more than a traditional Google search. For comparison, a 60-watt incandescent light bulb uses 60 watt-hours in one hour.

A typical AI data center uses as much power as 100,000 households.
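The figures above can be sanity-checked with some simple arithmetic. The Python sketch below is only an illustration: it takes the numbers quoted in this post and derives what they imply. The function names are hypothetical, and the 60-watt bulb rating is an assumption inferred from the 60 watt-hour comparison.

```python
# Figures quoted in this post (not independently measured).
TRAINING_ENERGY_MWH = 1_300   # approximate energy to train GPT-3
HOMES_EQUIVALENT = 130        # U.S. homes powered for one year
QUERY_WH = 2.9                # energy per ChatGPT query
SEARCH_RATIO = 10             # query vs. traditional search
BULB_WATTS = 60               # assumed incandescent bulb rating


def implied_home_use_mwh(training_mwh: float, homes: int) -> float:
    """Annual electricity use per home implied by the comparison."""
    return training_mwh / homes


def implied_search_wh(query_wh: float, ratio: float) -> float:
    """Energy per traditional search implied by the '10 times' claim."""
    return query_wh / ratio


def bulb_minutes(energy_wh: float, bulb_watts: float) -> float:
    """Minutes a bulb of the given wattage could run on this energy."""
    return energy_wh / bulb_watts * 60


if __name__ == "__main__":
    print(f"Implied use per home: {implied_home_use_mwh(TRAINING_ENERGY_MWH, HOMES_EQUIVALENT):.1f} MWh/year")
    print(f"Implied energy per search: {implied_search_wh(QUERY_WH, SEARCH_RATIO):.2f} Wh")
    print(f"One query runs a {BULB_WATTS} W bulb for {bulb_minutes(QUERY_WH, BULB_WATTS):.1f} minutes")
```

Read this way, the comparison implies about 10 MWh of electricity per home per year, and one query is roughly the energy a 60-watt bulb uses in three minutes.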

The amount of energy used by GenAI depends on the type of content that is generated.

Figure 2: Tasks examined in a 2024 research study, “Power Hungry Processing: Watts Driving the Cost of AI Deployment?”, and the average quantity of carbon emissions they produced for 1,000 queries.

Figure 2 shows the average carbon emissions across a variety of GenAI tasks from a recent study by researchers at Hugging Face and Carnegie Mellon University. The researchers found that image generation was by far the most energy-intensive task. For example, generating a single image with a powerful AI model can use as much energy as fully charging your smartphone. By comparison, generating text 1,000 times uses only about 16% of a full smartphone charge. Generating 1,000 images with a powerful AI model produces as much carbon dioxide as driving four miles in an average car.

The rise of GenAI development and use has even caused some companies to regress on their climate commitments. Microsoft's emissions are up 29% from their 2020 baseline. According to their annual report, the rise in emissions primarily comes from the construction of more data centers and the associated embodied carbon in building materials, as well as hardware like semiconductors, servers, and racks.

Why do GenAI models use so much energy? The short answer is that it’s a combination of training the model and ongoing model use after deployment.

Training: To improve AI capability, developers train models on as much data as possible, increasing the size of the model roughly in proportion with the amount of available data. This increases the energy required to power the computation.

Inference (use/query): The energy costs from AI inference are ongoing after model deployment. Each time your Google search includes an AI summary at the top of your search results, that is a query. Each time you prompt ChatGPT, that is a query.

An important distinction to note is the difference in energy use between model training and inference. You might assume that training the AI model is the most energy-intensive stage. In reality, research has found that AI inference typically consumes more energy than training due to its continuous and often high-volume operation. In other words, training a GenAI model uses a lot of energy, but once that model is available for commercial use, the cumulative energy demand of all the queries made by users far outweighs that of the training stage.
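To make the training-versus-inference comparison concrete, here is a rough Python sketch of a break-even estimate. It reuses two figures quoted earlier in this post (about 1,300 MWh to train GPT-3 and about 2.9 Wh per ChatGPT query), which describe different models, so the result is only indicative. The daily query volume is a purely hypothetical assumption, not a reported number.

```python
WH_PER_MWH = 1_000_000  # watt-hours in one megawatt-hour


def breakeven_queries(training_mwh: float, query_wh: float) -> float:
    """Number of queries whose combined energy equals the training energy."""
    return training_mwh * WH_PER_MWH / query_wh


def days_to_breakeven(training_mwh: float, query_wh: float,
                      queries_per_day: float) -> float:
    """Days of use until cumulative inference energy matches training."""
    return breakeven_queries(training_mwh, query_wh) / queries_per_day


if __name__ == "__main__":
    training_mwh = 1_300                   # figure quoted in this post
    query_wh = 2.9                         # figure quoted in this post
    hypothetical_daily_queries = 10_000_000  # assumption for illustration only

    n = breakeven_queries(training_mwh, query_wh)
    d = days_to_breakeven(training_mwh, query_wh, hypothetical_daily_queries)
    print(f"Break-even after about {n / 1e6:.0f} million queries")
    print(f"At {hypothetical_daily_queries:,} queries/day: about {d:.0f} days")
```

Under these assumptions, inference overtakes training after a few hundred million queries. A popular service could reach that volume quickly, which is why ongoing usage dominates the total.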

The arrival of DeepSeek, a free open-source large language model (LLM) created by a China-based AI company in 2023, initially showed promise of being significantly more energy efficient than OpenAI and Anthropic’s models. However, further investigation has found that those initial reports may have been misleading. While DeepSeek uses far less electricity during the training process compared to other similar LLMs, that energy saved in training seems to be largely offset by its more intensive techniques used to answer queries.

While researchers continue to home in on exactly how much energy DeepSeek uses in comparison to its competitors, we know one thing for certain: GenAI models will continue to guzzle energy at an exponentially increasing rate.

Impacts on Local Communities

To meet these skyrocketing energy demands, AI companies are going the extra mile. Or in Microsoft’s case, three miles – in September 2024, Microsoft inked a deal to reopen the infamous Three Mile Island nuclear power plant in Pennsylvania. The deal gives Microsoft all the electricity from the reactor over the next 20 years, which is needed to power the tech giant’s AI and cloud computing products.

It’s not just Microsoft seeking additional power sources. Amazon and Google have both signed agreements to use nuclear plants to power their data centers. Former Google CEO Eric Schmidt recently told congressional lawmakers on the House Energy and Commerce Committee that he wasn’t picky about where the power comes from. “Energy in all forms, renewable, nonrenewable, whatever…needs to be there, and it needs to be there quickly,” said Schmidt. In addition, earlier this month, President Trump signed an executive order to boost the U.S. coal industry, in part to fuel AI data centers.

Despite these efforts to meet growing data center energy needs, current U.S. energy infrastructure simply may not be able to keep up with the rapid pace of increasing electricity needs. The World Economic Forum estimates that the computational power needed for sustaining AI's growth doubles roughly every 100 days. Microsoft claims the rate is every six months. At any rate, local communities are already feeling the impacts.
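The gap between these two estimates is larger than it may sound. The short Python sketch below converts a doubling period into an annual growth factor using the standard compounding formula, 2^(365 / doubling period in days). Note that both estimates describe computational power, not electricity use directly. Treating six months as 182.5 days is a simplifying assumption.

```python
def annual_growth_factor(doubling_days: float) -> float:
    """Growth multiple over 365 days for a given doubling period."""
    return 2 ** (365 / doubling_days)


if __name__ == "__main__":
    estimates = {
        "World Economic Forum (every 100 days)": 100,
        "Microsoft (every six months)": 182.5,  # assumed day count
    }
    for source, days in estimates.items():
        print(f"{source}: about {annual_growth_factor(days):.1f}x per year")
```

A 100-day doubling period works out to more than twelvefold growth in a year, while a six-month doubling period works out to fourfold. Either pace is far faster than new generation and transmission capacity is typically built.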

As AI data centers strain the energy grid, the bills rise for everyday customers. When a new data center is built in the region, its intense electricity demands can drive up the cost of local utilities for ratepayers. In Georgia, customers saw six power rate increases in the span of just two years. For everyday consumers, each rate increase can dramatically raise their monthly bill. With at least 36 states offering significant tax breaks for data centers, many ratepayers feel they’re being made to subsidize multi-billion dollar companies like Google and Amazon through their utility bills. To protect consumers, lawmakers from both sides of the aisle have introduced legislation in Georgia, Virginia, California, Oregon, and Texas to shield ratepayers from unfair rate hikes.

Data centers are often touted as engines for job creation. But in an interview, Tamara Kneese, Director of Data & Society’s Climate, Technology, and Justice Program, shared that, in reality, “data centers are not factories; they're not providing a lot of jobs… in fact, most of the jobs that are tied to data centers are in the construction phase.” Once construction is complete, the data center may actually only employ a handful of highly specialized roles, often filled by people who are brought in from other regions. “It may not even be benefiting local workers and local communities,” said Kneese. “And so the tax revenue that would have gone to local schools or public transit is then given back to these tech companies that are getting a big break for building something that is not actually a boon to the community.”

In Virginia, the world's densest data center hub and home to “Data Center Alley,” developers, lawmakers, and residents are grappling with the reality of being unable to meet growing electricity demands. Canary Media estimates that Virginia would need to construct twice as many solar farms per year by 2040 as it did in 2024, build more wind farms than all the state’s current offshore wind plans combined, and install three times more battery storage than the state’s biggest utility intends to build to meet growing demands. Even then, Virginia would still need to build at least seven new fossil fuel plants. Elected officials and their constituents are concerned about how rapid data center growth will affect their daily lives. “If we fail to act, the unchecked growth of the data center industry will leave Virginia’s families, will leave their businesses, footing the bill for infrastructure costs, enduring environmental degradation, and facing escalating energy rates,” Virginia state senator Russet Perry recently stated.

To address these energy challenges, it’s time for local governments to take action.

What Local Governments Can Do

Though the scale and urgency of the issue may seem daunting, there are many meaningful actions that local governments can take to help address the energy impacts of AI. Local governments, with their significant procurement and regulatory power, are vital to a robust approach for making GenAI sustainable.

Local governments should promote more sustainable GenAI practices by: 1) demanding transparency from AI suppliers, 2) requiring greater transparency and energy efficiency standards for data centers, and 3) promoting responsible practices around the use of GenAI.

Demand greater transparency from AI suppliers around their product’s energy consumption.

Policymakers need better information about the amount of energy consumed by AI before they can take evidence-based action. Relying on companies to voluntarily provide this data has not been effective. Local governments can play an important role by demanding greater transparency from AI vendors.

To this end, local governments should leverage the public procurement process. The ability to set requirements for vendors and refuse purchase of solutions that do not meet their standards is a powerful policy lever. By requiring AI companies to disclose the energy consumption of their AI products and not buying AI tools that are not environmentally sustainable, agencies can begin to collect this important information and incentivize AI companies to be more environmentally conscious.

The GovAI Coalition recently released a new version of the AI FactSheet that includes a section for vendors to disclose the environmental impacts of their AI product. Agencies should implement the AI FactSheet and require prospective vendors to share “energy and water consumption for training and/or per use of the model, sources of energy and water for [their] solution, and [their] organization’s approach to reducing environmental impact” during the procurement process. If the vendor shares unsatisfactory numbers or even outright refuses to disclose this information, agencies should refrain from contracting with them, to the extent possible.
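For agencies that track vendor responses in a spreadsheet or database, a completeness check could look something like the Python sketch below. This is a hypothetical illustration only: the class, field names, and function are assumptions loosely based on the items quoted above, not the actual schema of the AI FactSheet.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class VendorDisclosure:
    """Hypothetical record of one vendor's environmental disclosure."""
    vendor: str
    energy_consumption: Optional[str] = None   # training and/or per use
    water_consumption: Optional[str] = None    # training and/or per use
    energy_and_water_sources: List[str] = field(default_factory=list)
    reduction_approach: str = ""


def missing_items(disclosure: VendorDisclosure) -> List[str]:
    """List the disclosure items the vendor has left blank."""
    missing = []
    if not disclosure.energy_consumption:
        missing.append("energy consumption")
    if not disclosure.water_consumption:
        missing.append("water consumption")
    if not disclosure.energy_and_water_sources:
        missing.append("sources of energy and water")
    if not disclosure.reduction_approach.strip():
        missing.append("approach to reducing environmental impact")
    return missing


if __name__ == "__main__":
    response = VendorDisclosure(
        vendor="Example Vendor",              # made-up vendor
        energy_consumption="0.5 Wh per use",  # made-up value
    )
    gaps = missing_items(response)
    if gaps:
        print(f"{response.vendor} has not disclosed: {', '.join(gaps)}")
    else:
        print(f"{response.vendor} provided a complete disclosure")
```

A check like this only flags missing answers. Judging whether the disclosed numbers are satisfactory still requires review by agency staff.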

For vendors who are unsure of how to measure their energy impacts, the AI Energy Score offers an approach. Hugging Face recently launched the AI Energy Score project to help standardize energy measurement for AI models and enable comparison of energy consumption for different tasks. The project offers a benchmark that can empower local governments and policymakers to enforce efficiency standards.

In addition, some legislators have begun to take action at the state level to promote greater transparency. For example, AB 222, introduced by Assemblymember Bauer-Kahan in California, would require developers to calculate the total energy used to develop their GenAI model and disclose it publicly on their company website. In Virginia, HB 2035 would require data centers to report quarterly on water and energy use. With accurate reporting of carbon impacts, policymakers will be empowered to perform cost-benefit analyses on various use cases for GenAI and determine which applications are worthwhile.

Require greater transparency from data centers and establish energy efficiency standards.

AI and data centers are here to stay. So, how can we create sustainable energy systems that can continue to meet the growing energy demands not just of AI, but the increasingly digital world that we live in? Though not the whole solution, part of the answer lies in achieving more transparency and establishing energy efficiency standards.

Local governments should demand greater transparency around the energy consumption of data centers in the form of regular reports on electricity use. In an interview, Irina Raicu, Internet Ethics Program Director at the Markkula Center for Applied Ethics, emphasized this need for better transparency. “Relying on companies to voluntarily disclose their energy and water consumption has not proven to be effective…we need [reporting] requirements because you can't form effective policy without that information,” shared Raicu. To respond effectively, governments need precise and reliable information about electricity demands.

Raicu also offered the important reminder that it’s not just about AI, but that “we rely on data centers for everything we do online.” Without functional data centers, modern society would struggle to operate. “If AI becomes this sort of algae bloom that kind of spreads into all of the data centers and all of the ecosystems and uses up a lot of energy,” said Raicu, “it impacts everything else that we do digitally as well.”

To that end, we need a strategy to sustainably power data centers in the decades to come. Energy efficiency and renewable sources will be a critical component of the solution. Where possible, local governments should establish and enforce energy efficiency standards for data centers.

When approving the construction of new facilities, local public authorities (e.g., zoning and permitting bodies) should consider including conditional requirements around the energy efficiency of the hardware, software, and cooling techniques used by the data center. Authorities should also consider requirements for the data centers to integrate with renewable energy sources, including solar, wind, and geothermal energy. In 2024, the U.S. Department of Energy’s Center of Expertise for Energy Efficiency in Data Centers published a guide on best practices for energy efficient data center design, which may be a helpful resource for local governments as they negotiate the construction of new data centers.

There has been action at the state level to institute energy standards for data centers. In California, AB 222 would direct the California Energy Commission to “adopt efficiency standards for data centers.” This year in Virginia, HB 2578 was introduced (though did not advance) and would have required data center operators to meet renewable energy standards to be eligible for tax exemptions.

Educate your workforce and residents about the responsible use of GenAI.

Despite the widespread use of GenAI, many users – and decision-makers in government – are unaware of the environmental impacts. But without greater awareness, people won’t be equipped to make responsible and informed decisions about whether (or not) to use GenAI for a given task. According to Kneese, “there's an obvious need for more education around what the actual impacts of data centers are,” especially among those in government with the power to approve the construction of new data centers. “I think breaking through the myths is one important starting point,” said Kneese.

One of the most impactful actions local governments can take is simply educating their workforce and broader community about this issue (that’s exactly what we’re trying to do through this blog). Agencies can incorporate this education into existing AI training for employees.

Some agencies are also working to update their AI policies to specifically instruct employees to consider the environmental impacts of their GenAI usage. For example, the City of San José recently updated its GenAI Guidelines to include a section about how employees can reduce the environmental impacts of their ChatGPT use. The updated guidelines encourage employees to minimize the number of prompts they use, direct ChatGPT to generate shorter output, and only use GenAI when other tools will not meet their needs.

As we shared above, much of the energy consumption for GenAI can be attributed to inference, or ongoing usage/queries, rather than the training of the model. Unlike training, inference costs are ongoing and scale with usage. This means that an individual’s choices around how they use GenAI really matter. Reducing your query count is not the GenAI equivalent of giving up plastic straws. Unlike the largely debunked myth of conscious consumerism that was funded by big oil, in the case of GenAI, individuals actually have the potential to make a meaningful reduction in overall energy consumption when they alter their behavior.

The intense energy demands of AI data centers are an urgent problem. It’s time for local governments to take action and help shape a more sustainable AI future.

We hope you found this post informative and that you take away the following:

GenAI uses exorbitant amounts of energy. Different types of GenAI outputs (e.g., text, image, video) have differing rates of energy consumption (images and video are the most energy-intensive). Local energy grids will soon be unable to meet these massive energy demands and communities will feel the economic impacts.

AI is here to stay, and we argue that its responsible and informed use is vital to a sustainable AI future. Institutions and individuals must take accountability for the environmental impacts of their AI use and use AI with clear purpose. A responsible AI user is informed of the environmental impacts of their AI use and is able to weigh the costs and benefits of using GenAI for a given task and act accordingly.

It’s time for local governments to take action. If we want GenAI to be sustainable, we need greater transparency and standards around energy consumption of GenAI and data centers. Some possible approaches for public agencies:

Require GenAI suppliers to disclose their energy footprint via the AI FactSheet, or another AI nutrition label tool.

Require data centers to report their energy usage and comply with efficiency standards.

Educate your workforce to make responsible decisions around their use of GenAI.

Thanks for reading and see you next month for our discussion on water.

About the authors

Leila Doty is a Privacy and AI Analyst at the City of San José.

Eleonor Bounds is a Senior Analyst in Data Privacy and Responsible AI at the City of Seattle.

Lindsey Washburn is the Program Manager for Strategic Initiatives at the City of Tigard, Oregon.

Note: The opinions expressed in these articles are solely those of the author(s) and do not necessarily reflect the views, positions, or policies of the GovAI Coalition or the authors’ affiliated professional organization(s).

—

This is the blog for the GovAI Coalition, a coalition of local, state, and federal agencies united in their mission to promote responsible and purposeful AI in the public sector.